Auto-incorrect: Google changes ‘Muslims report terrorism’ to ‘Muslims support terrorism’

US search giant Google has been forced to apologize and alter its search algorithm after it repeatedly suggested that users who typed in positive queries about Muslims and terrorism were, in fact, looking for negative connections between the two.

The presumptuous suggestion was first spotted by American Muslim blogger Hind Makki, who was responding to a point made by Hillary Clinton during last week’s Democratic primary debate: that Muslims should be “on the frontline” of the battle against terrorism.

#Clinton "we need to understand that American #Muslims are on the frontline of our defense (...) they need to feel welcome" #DemDebate

— Sonia Dridi (@Sonia_Dridi) February 12, 2016

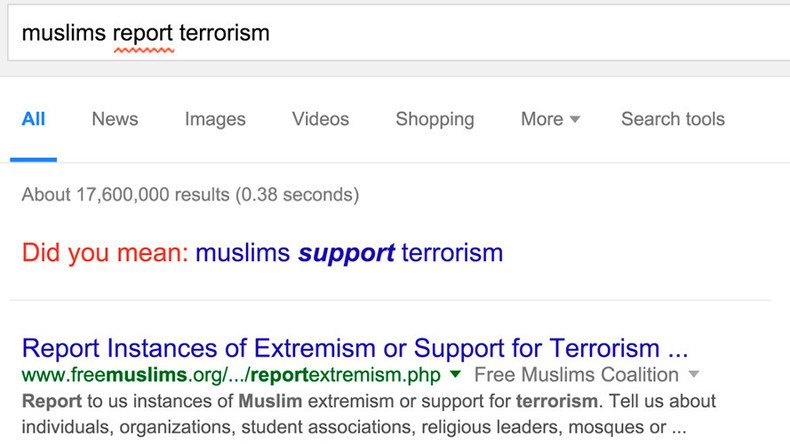

Makki typed 'American Muslims report terrorism' into Google, looking for a 2013 Duke University study showing that US Muslims already help weed out potential threats within the ranks of fellow believers.

To her surprise, Makki, who also runs a photo project documenting women’s prayer spaces in mosques around the world, found a Google suggestion beneath her query.

“Did you mean: American Muslims support terrorism?” asked the search engine.

Makki repeated the query with several variations, getting the same suggestion each time, then tweeted her outrage.

No Google, I didn't. pic.twitter.com/hF0vXpjcoc

— Hind Makki (@HindMakki) February 12, 2016

Several bloggers then repeated the experiment on their own computers to make sure that Google wasn’t simply basing its suggestion on Makki’s previous search history. The results were identical.

Google bases its suggestions on collocations – common combinations of words – that its users enter into the search bar.

“Autocomplete predictions are automatically generated by an algorithm without any human involvement. The algorithm is based on a number of objective factors, including how often others have searched for a word. The algorithm is designed to reflect the range of info on the web. So just like the web, the search terms you see might seem strange or surprising,” the California-based company explains on its support page.

'Muslims report terrorism' is such a rare search that the engine assumed Makki was looking for the similar-sounding 'Muslims support terrorism', likely a far more popular query.

“I thought it was hilarious, but also sad and immediately screen capped it. I know it’s not Google’s ‘fault,’ but it goes to show just how many people online search for ‘Muslims support terrorism,’ though the reality on the ground is the opposite of that,” said Makki in an email to the media.

Google encourages users to report offensive suggestions. It has now removed the one Makki pointed out and issued a statement of apology.

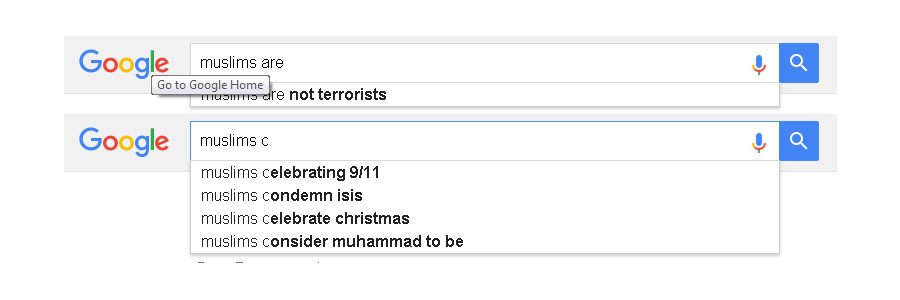

This appears not to be the first time the algorithm has been censored on the touchy topic. If you type in “Muslims are…”, autocomplete (a function related to the “Did you mean” suggestion) proposes “…not terrorists” to complete the phrase, but not the opposite. There are also suggestions that Muslims “condemn ISIS,” “celebrate Christmas,” and “protect Christians,” though, if Google is to be believed, they are also “taking over Europe” and “celebrating 9/11.”