Return of physiognomy? Facial recognition study says it can identify criminals from looks alone

Two scientists from China's Shanghai Jiao Tong University have drawn ire from the scientific community after producing a study which claims that a computer algorithm can tell a criminal from a law-abiding citizen in nine cases out of ten.

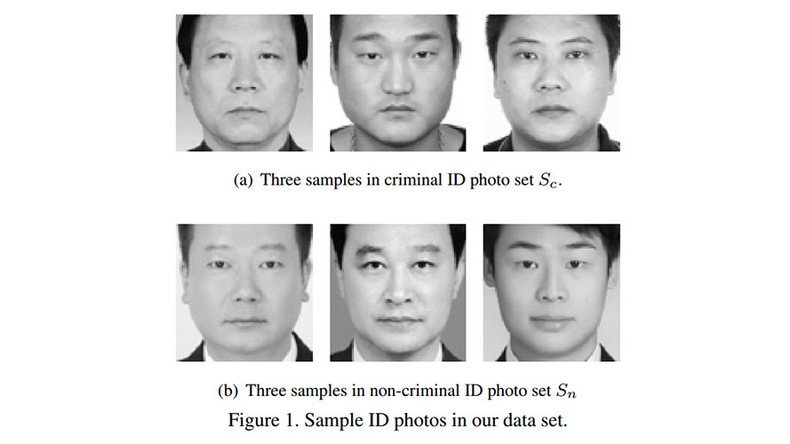

Xiaolin Wu and Xi Zhang “collected 1,856 ID photos that satisfy the following criteria: Chinese, male, between ages of 18 and 55, no facial hair, no facial scars or other markings.” Of the men pictured, 730 were criminals, whose photos were provided by the police.

The scientists then built four different machine-learning classifiers. To 'train' them, they fed each one a portion of the photo set and told it which of the men were criminals. The classifiers were then given the remaining photos and instructed to identify the convicts without any additional cues.

“All four classifiers perform consistently well and produce evidence for the validity of automated face-induced inference on criminality, despite the historical controversy surrounding the topic,” the authors wrote in the paper, which has not been peer-reviewed but has been posted online on arXiv, an open-access preprint server.

The convolutional neural network (CNN), a “state-of-the-art” form of machine learning, identified criminals correctly in 89.5 percent of instances, a result “paralleled by all other three classifiers which are only few percentage points behind in the success rate of classification.”

The criminals appeared to share some physical features that helped the computer identify them.

“We find some discriminating structural features for predicting criminality, such as lip curvature, eye inner corner distance, and the so-called nose-mouth angle,” says the paper.

The researchers found that the convicts, who included both serious and petty criminals, had eyes set closer together and a more sharply curved upper lip.

But the most telling factor was not any single feature. Instead, the criminals' faces appeared to differ more from the norm, and from each other, than those of non-criminals: in their appearance, they were literally deviants.

“The faces of general law-abiding public have a greater degree of resemblance compared with the faces of criminals, or criminals have a higher degree of dissimilarity in facial appearance than normal people,” wrote the authors.

‘Dangerous pseudoscience’

Wu and Zhang attempt to preempt potential criticism, writing that “we intend not to nor are we qualified to discuss or debate on societal stereotypes” and claiming that their program propagates “no biases whatsoever due to past experience, race, religion, political doctrine, gender, age, etc.” Even so, the paper has come under fire.

“I’d call this paper literal phrenology, it’s just using modern tools of supervised machine learning instead of calipers. It’s dangerous pseudoscience,” Kate Crawford, an AI researcher with Microsoft Research New York, MIT, and NYU, told The Intercept.

Others have said that the study does illustrate something, just not what the authors claim.

"This article is not looking at people's behaviour, it is looking at criminal conviction," Susan McVie, professor of quantitative criminology at the University of Edinburgh, told the BBC.

"The criminal justice system consists of a series of decision-making stages, by the police, prosecution and the courts. At each of those stages, people's decision making is affected by factors that are not related to offending behaviour, such as stereotypes about who is most likely to be guilty."

"Research shows jurors are more likely to convict people who look or dress a certain way. What this research may be picking up on is stereotypes that lead to people being picked up by the criminal justice system, rather than the likelihood of somebody offending."

The study's findings are not entirely unprecedented: a 2011 Cornell experiment showed that people shown a photo could tell convicted criminals from non-criminals on instinct alone.

But the ability to label people instantly by computer, combined with the increasingly accessible mass facial recognition technology being deployed by governments worldwide, means that a database of millions of “criminal types” could be created within days, without any evidence of wrongdoing. These people could then be monitored, questioned or even prosecuted on the basis of their appearance alone.

“This paper is the exact reason why we need to think about ethics in AI,” Stephen Mayhew (@mayhewsw) wrote on Twitter on 17 November 2016. https://t.co/U6J3I62VrN

"Using a system like this based on looks rather than behaviour could lead to eugenics-based policy-making," said McVie. "What worries me the most is that we might be judging who is a criminal based on their looks. That sort of approach went badly wrong in our not-too-distant history."