On February 15, Amnesty International published a report exposing how the New York Police Department has constructed a vast metropolis-spanning surveillance network heavily reliant on highly controversial facial recognition technology (FRT), which serves to “reinforce discriminatory policing against minority communities.”

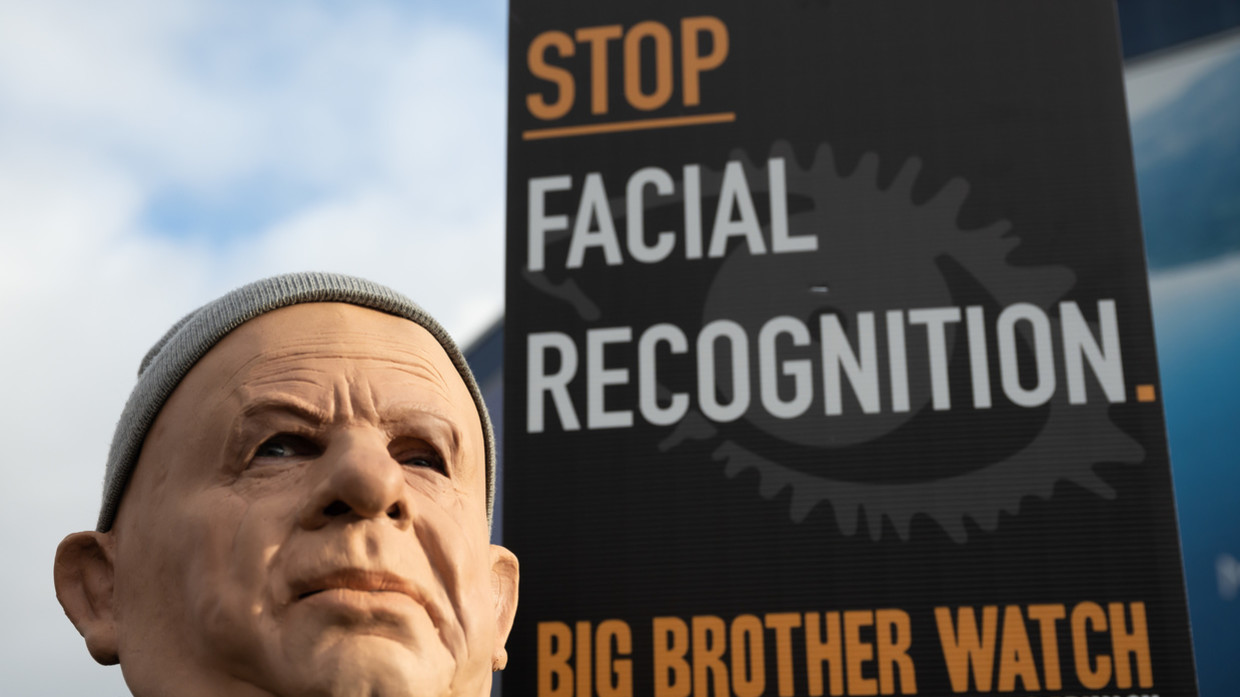

Once a science fiction staple, the Orwellian technology is quickly becoming normalized, wholeheartedly embraced by police forces up and down the nation. FRT allows police to compare imagery from CCTV and other sources with traditional photographic records, as well as databases of billions of headshots, some of which are crudely pulled from individuals’ social media profiles without their knowledge or consent. The NYPD is a particularly enthusiastic user – or, perhaps, abuser – of FRT, with 25,500 cameras spanning the city today.

There is also a clear racial component to FRT deployment in New York – Amnesty found that in areas where the proportion of non-white residents is higher, so too is the concentration of FRT-equipped CCTV cameras. The organization argues that FRT has in effect supplanted traditional ‘stop-and-frisk’ operations by law enforcement.

Between 2002 and 2019, innocent New Yorkers were searched and questioned in public by law enforcement over five million times. This reached a peak in 2011, a year during which almost 700,000 people were stopped and frisked. While the tactic’s usage has fallen significantly in the years since, the number of FRT-equipped surveillance devices in the city has multiplied over the same period.

And all too often, the technology is used to “identify, track and harass” protesters. For example, analysis of the routes typically taken by attendees to Black Lives Matter demonstrations in mid-2020 revealed “nearly total surveillance coverage” by FRT-equipped cameras. In August that year, FRT was used to track down a protester who’d committed the egregious crime of yelling near a law enforcement official, leading to over 50 officers surrounding his apartment and shutting down nearby streets, while NYPD helicopters hovered menacingly overhead.

In sum, Amnesty judges New York’s FRT network to “violate the right to privacy, and threaten the rights to freedom of assembly, equality and non-discrimination.” While unambiguously shocking, its report represents the tip of the iceberg in respect of FRT usage by the NYPD – not least because the department refused to disclose public records regarding its acquisition of FRT and other surveillance tools in response to Amnesty’s requests, prompting the rights group to take legal action, which remains ongoing as of February 2022.

Much of New York City’s modern surveillance infrastructure falls under the Domain Awareness System (DAS), conceived following the 9/11 attacks. The NYPD is supported in this effort by partnerships with surveillance camera company Pelco, tech giant Microsoft, and the FBI’s counterterrorism wing.

Events such as the attempted 2010 Times Square car bombing provided justification for an even more expansive FRT rollout, meaning that today, local forces in all five of New York’s boroughs have carte blanche access to databases containing extensive data on citizens and vehicle registration plates, among other things.

Even more troublingly, emails obtained by MuckRock testify to an intimate relationship between the NYPD and Clearview AI. The firm’s artificial intelligence applications allow police to upload images of suspects and compare them to a 10 billion-strong database of facial images scraped from the web, including public websites and social media accounts without adequate privacy settings.

Clearview’s website boasts of its ability to provide police with customized mugshot and watchlist galleries and facilitate collaboration with other agencies on a regional and national level. Its quest to become the centralized source of facial recognition imagery has been furthered by over 1,800 public agencies testing or using it. Beyond police forces, the technology’s tentacles reach schools, hospitals, immigration, and the Air Force, among a great many others.

The company features prominently in a 2021 Government Accountability Office review of FRT use, which found that 18 out of 24 US federal agencies – a total which didn’t even include intelligence services, such as the CIA – employed FRT systems in 2020 for purposes including cyber security, domestic law enforcement, and surveillance.

Six (the Departments of Homeland Security, Justice, Defense, Health and Human Services, Interior, and Treasury) reported using the technology “to generate leads in criminal investigations, such as identifying a person of interest by comparing images of the person against databases of mugshots or from other law enforcement encounters.”

Numerous well-promoted studies have vouched for the immense precision and efficacy of FRT – research published in April 2020 by the Center for Strategic and International Studies stated that the best FRT systems have “near-perfect accuracy” in “ideal conditions.”

Of course, as the think tank also acknowledged, real-world conditions are almost never so ideal – a cited experiment showed that a “leading” FRT algorithm’s error rate leaped from an alleged 0.1% to 9.3% when matching pictures in which the subject was not looking directly at the camera or was obscured by objects or shadows.

This is particularly concerning given you are not only more likely to be captured by FRT if you are a minority, but also more likely to be misidentified by the technology. Investigations by Harvard and NIST have found this isn’t just a case of more surveillance meaning more false positives, but that the technology does a markedly worse job of identifying minorities in particular. In multiple states, there are numerous cases of people of color being wrongfully arrested – and serving jail time – due to FRT errors.

Clearly, the NYPD is unmoved by such findings, and the force isn’t unique in this regard. The LAPD, which has been notoriously embroiled in countless high-profile racial scandals over the years, and has been dubbed “the single most murderous police force in the country,” continues to budget for mass use of FRT despite hundreds of emails and a signed letter from 70 organizations in opposition.

Given this demand, FRT is a fast-growing industry today. The market was valued at $3.72 billion in 2020, and research firm Mordor Intelligence forecasts it will balloon to almost $12 billion by 2026, a compound annual growth rate of 21.71%. What’s more, the Covid-19 pandemic has compelled vendors to greatly enhance the technology’s capabilities, with Chinese providers creating applications to identify infected citizens, and even those wearing masks – a frequent means by which protesters choose to obscure themselves.
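The growth figures quoted above can be sanity-checked with a few lines of arithmetic. The sketch below is only illustrative: it assumes the $3.72 billion valuation is the base and that growth compounds once a year, and because the forecast's exact base year is not stated here, it tries both a five-year and a six-year horizon. The function names are hypothetical, not taken from any report.

```python
def project_value(base: float, rate: float, years: int) -> float:
    """Compound `base` forward at `rate` (a fraction) for `years` annual periods."""
    return base * (1.0 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` periods."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted in the article (billions of US dollars, fractional rate).
BASE_2020_BN = 3.72
QUOTED_CAGR = 0.2171

if __name__ == "__main__":
    # Assumption: the horizon is either 5 or 6 compounding years.
    for years in (5, 6):
        value = project_value(BASE_2020_BN, QUOTED_CAGR, years)
        print(f"{years} years at {QUOTED_CAGR:.2%}: ${value:.2f}bn")
```

Under the six-year assumption the projection comes out at roughly $12.1 billion, while five years of compounding gives about $9.9 billion, so how closely the quoted rate matches "almost $12 billion" depends on which year the forecast treats as its starting point.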

Such riches grant the FRT industry a sizable lobbying budget, and corporate interest in face recognition legislation has recently taken a quantum leap. A November 2020 review of congressional lobbying filings shows that mentions of the technology jumped more than four-fold between 2018 and 2019 alone. It’s surely no coincidence IBM and Microsoft – two major FRT contractors – issued public statements upon Joe Biden’s election as president, urging the incoming administration to craft facilitative regulations governing face recognition.

Still, not everyone is sold on FRT. For example, the cities of Oakland, Portland, San Francisco, and Seattle have imposed blanket bans on the technology, prohibiting its use by any government agency within their jurisdictions. Several state legislatures are also conducting official investigations into FRT, and the nefarious purposes to which the data it collects and stores are put. At the start of 2021, New Jersey’s attorney general put a moratorium on Clearview’s use by police and announced an investigation into “this product or products like it.”

Similarly, at the start of February, the Internal Revenue Service caved to enormous public pressure and cancelled plans to use facial recognition to confirm the identities of Americans using its website to pay taxes or access documents.

By contrast, New York Mayor Eric Adams has repeatedly and forcefully asserted his commitment to expanding the city’s FRT capabilities even further since winning office in November 2021. In late January, at a press conference announcing the release of a “blueprint to end gun violence,” he pledged to “move forward on using the latest in technology to identify problems, follow up on leads and collect evidence.”

“From facial recognition technology to new tools that can spot those carrying weapons, we will use every available method to keep our people safe,” the retired police captain-turned-politician said. “If you’re on Facebook, Instagram, Twitter – no matter what, they can see and identify who you are without violating the rights of people… It’s going to be used for investigatory purposes.”

The blueprint stresses that “new technology” will be used in a “responsible” manner, simply to “identify dangerous individuals and those carrying weapons,” and never “the sole means” upon which arrests are based, “but as another tool as part of larger case-building efforts.” It’s difficult to see how a blanket, indiscriminate bulk information harvesting mechanism could ever be used responsibly or quite so specifically, although reference to its wider role in “case-building” and “investigatory” efforts is certainly striking.

One key method by which spying agencies build dossiers on targets is through extensive surveillance and cultivation of their friends, family, and associates – infiltration, covert and overt, of the wider networks surrounding them. It’s no doubt for this reason that the CIA has played a central role in developing FRT since the early 1960s. Langley’s never-ending quest to master this disquieting art may account for the technology’s ever-increasing public presence.

After all, what better way to legitimize invasive, civil liberty-infringing technology than to normalize it via widespread, everyday usage? The Detail will explore this burning question, among many others, in the next instalment of this investigative series. Stay tuned – there’s more. Much more.