When human troops are replaced by robots on the battlefield, it won’t be because the Pentagon's had some revelation about the value of human life – it’ll be an effort to defuse anti-war protests by minimizing visible casualties.

US military commanders are itching to get their hands on some killer robots after an Army war game saw a human-robot coalition repeatedly rout an all-human company three times its size. The technology used in the computer-simulated clashes doesn’t exist quite yet – the concept was only devised a few months ago – but it’s in the pipeline, and that should concern anyone who prefers peace to war.

“We reduced the risk to US forces to zero, basically, and were still able to accomplish the mission,” Army Captain Philip Belanger gushed to Breaking Defense last week, after commanding the silicon soldiers through close to a dozen battles at Fort Benning Maneuver Battle Lab. When they tried to fight an army three times their size again without the robotic reinforcements? “Things did not go well for us,” Belanger admitted.

What could go wrong?

So why shouldn’t the US military save its troops by sending in specially-designed robots to do their killing? While protecting American lives is one reason to oppose the US’ ever-metastasizing endless wars, it’s far from the only reason. Civilian casualties are already a huge problem with drone strikes, which by some estimates kill their intended target only 10 percent of the time. Drones, an early form of killer robot, offer minimal sensory input for the operator, making it difficult to distinguish combatants from non-combatants. Soldiers controlling infantry-bots from afar will have even less visibility, since their robots are stuck at ground level, and their physical distance from the action means shooting first and asking questions later becomes an act no more significant than pulling the trigger in a first-person-shooter video game.

Any US military lives saved by using robot troops will thus be more than offset by a spike in civilian casualties on the other side. This will be ignored by the media, as “collateral damage” often is, but the UN and other international bodies might locate their long-lost spines and call out the wholesale slaughter of innocents by the Pentagon’s death machines.

Worse, having no “skin in the game” – literally, in this case – lowers one of the primary barriers that once stopped the US from starting wars with whatever country refused to bend to its will. The US has not picked a fight with a militarily-equal enemy in over half a century, but robots capable of increasing the might of the Pentagon’s military force ninefold will seriously shift the balance of power in Washington’s favor. The country’s top warmongers will be fighting amongst themselves over whom to strike first, reminding apprehensive citizens that it’s not as if their sons and daughters will be put at risk – unless the US’ chosen target happens to lob a bomb overseas in retaliation.

The chilling possibility that the avoidance of home-team casualties would open the door to all the wars the US has ever wanted to fight, but has lacked the military capacity for, runs up against the fact that Washington – already over $22 trillion in debt – can’t possibly afford to further expand its already monstrous military footprint. Yet the Pentagon never runs out of money – it added $21 billion to its budget this year, and has never been told there’s no funding for even the most ill-advised weapons program. So while money might logically act as a brake on the endless expansion of endless war, in the US, the Fed will just print more.

Yes, it could get worse

The Fort Benning experiment already hints at a dystopian future of endless war, but the tech used to win those simulated skirmishes is positively quaint compared to other technology in the pipeline. Autonomous killer robots – bots that select and kill their own targets using artificial intelligence – are the logical endpoint of the mission to dehumanize war completely, filling the ranks with soldiers that won’t ask questions, won’t talk back, and won’t hesitate to shoot whoever they’re told to shoot.

The pitfalls of such a technology are obvious. If AI can’t be trained to distinguish between sarcasm and normal speech, or between a convicted felon and a congressman, how can it be trusted to reliably distinguish between civilian and soldier? Or even between friend and foe?

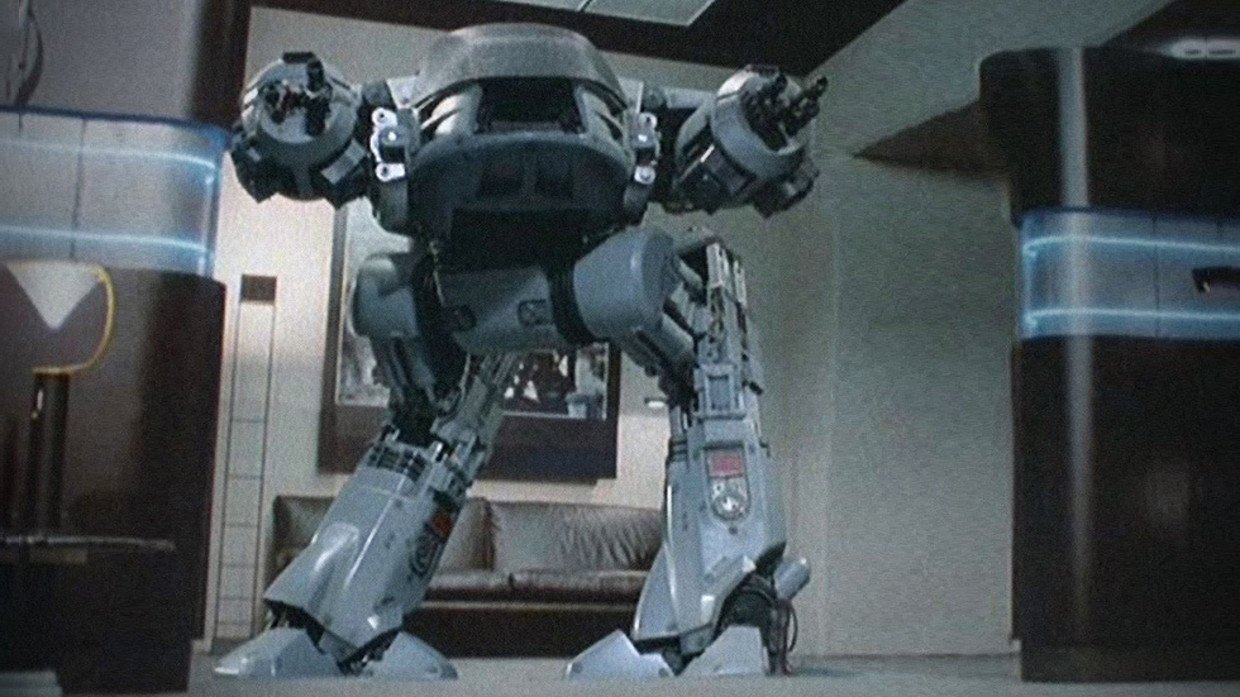

Everyone has seen robots bring about the apocalypse on-screen, but speculative fiction starts to encroach on reality when bright minds like Elon Musk and Stephen Hawking raise the alarm that AI can spell humanity's doom, their warnings echoed by dozens of think pieces in tech media outlets.

The first human soldier to die by a robot comrade’s “friendly fire” will no doubt be framed as an accident, but who will be held responsible in the event of a full-on robot mutiny? Does anyone remember the plot of The Terminator, or The Matrix?

The warbot-minded military is aware of the bad publicity. A recent Pentagon study (focusing on robotically-enhanced humans but applicable to robotic soldiers as well) warns that it’s better to “anticipate” and “prepare” for the impact of these technologies by crafting a regulatory framework in advance than to hastily impose one later, presumably in reaction to some catastrophic robot mishap. In order to have their hands free to develop the technology as they see fit, military leaders should make an effort to “reverse the negative cultural narratives of enhancement technologies,” lest the dystopian narratives civilians carry in their heads spoil all the fun.

Meanwhile, the Campaign to Stop Killer Robots, a coalition of anti-war groups, scientists, academics, and politicians who’d rather not take a 'wait and see' approach to a technology that could destroy the human race, is calling on the United Nations to adopt an international ban on autonomous killing machines. Whose future would you prefer?

The statements, views and opinions expressed in this column are solely those of the author and do not necessarily represent those of RT.