The UK state’s desire to keep tabs on us via smartphone apps is sinister. Fortunately, it’s turning into another Covid-19 cock-up

The UK government has rolled out an app to help trace people who may have been exposed to the virus. But its centralised approach, never mind concerns about the technology and privacy, means the app is unlikely to work at all.

With many countries starting to come out of lockdown, a route back to something like normality might be a ‘test, track and trace’ system. However, by not paying sufficient attention to privacy and technical concerns, the UK government seems to be making a mess of it.

Here's how it's supposed to work. We test people for Covid-19, or they report symptoms that suggest they might have the virus. If they are diagnosed with it, we ensure that they and everyone they have been in contact with isolate themselves for two weeks. That should quickly cut the chain of transmission.

Normally, this would be done by asking an infected person who they have been in contact with and letting those people know they could be infected, too. That's easy enough with sexually transmitted diseases - our partners are hopefully memorable - but with a highly infectious disease like Covid-19, that would be less reliable and very laborious.

The rise of the smartphone offers the possibility of automated contact tracing. The technology appears to be viable, if not without complications. Millions of people would need to install an app that broadcasts an anonymous code via Bluetooth, which nearby phones can receive, and that also records the codes broadcast by those phones. The upshot is that each phone builds up a database of the phones it has been close to.
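To make that Bluetooth exchange concrete, here is a minimal Python simulation. It is a sketch under stated assumptions, not any real app's code: the `Phone` class and `encounter` function are hypothetical, being in radio range is reduced to an explicit function call, and each encounter generates a fresh random code (real apps rotate their codes on a timer instead).

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class Phone:
    """Hypothetical phone running a contact-tracing app (simulation only)."""

    # Codes this phone has broadcast; they never leave the device.
    sent_codes: list[str] = field(default_factory=list)
    # Codes heard from nearby phones: the local "database of contacts".
    heard_codes: set[str] = field(default_factory=set)

    def broadcast(self) -> str:
        # A fresh random code carries no name or number, so it is anonymous.
        code = secrets.token_hex(16)
        self.sent_codes.append(code)
        return code


def encounter(a: Phone, b: Phone) -> None:
    """Simulate two phones in Bluetooth range exchanging their current codes."""
    a.heard_codes.add(b.broadcast())
    b.heard_codes.add(a.broadcast())


alice, bob, carol = Phone(), Phone(), Phone()
encounter(alice, bob)
print(alice.sent_codes[0] in bob.heard_codes)  # True
print(len(carol.heard_codes))                  # 0
```

Neither phone learns anything about the other beyond a random string; the set of heard codes is the phone's record of who it has been close to.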

Here’s the central(ised) problem

If an app user is diagnosed with Covid-19, they can then upload their mini-database of contacts to a central server. The owners of the phones in that database will be alerted and asked to isolate themselves for up to 14 days. In theory, if it all worked, people would be isolated from society before they had the chance to pass the disease on, quickly suppressing the prevalence of the virus.

The trouble is that the UK is doing all this differently from many other countries. From the narrow view of public health, the UK approach has some merits. But for reasons of technology, human rights law and privacy, it is more questionable and, most importantly, may very well not work at all. It has had a very poor start during its trial on the Isle of Wight this week.

Are we facing another Covid cock-up because of the desire of UK health officials to be in control?

Essentially, there are two different ways of making the app work. Both require that, when someone is diagnosed with Covid-19, their data is uploaded to a central database. The crucial question is what that data contains and how it is processed.

In a model called Decentralized Privacy-Preserving Proximity Tracing (DP-3T), each phone using the app regularly downloads a list of the codes associated with confirmed infections. If one of those codes matches a code in the phone's own database, its owner should isolate themselves.

All the work of matching codes takes place on the user's phone, not on the central server.

That way, nobody knows who owns which phone, or which phone is associated with an infection. According to Google, DP-3T “heavily inspired” the protocol it developed jointly with Apple.
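The decentralised matching can be sketched in a few lines of Python. This is a simplified illustration, not the DP-3T reference implementation: DP-3T derives its broadcast codes from a secret key that changes daily using its own pseudorandom functions, for which HMAC-SHA256 stands in here, and the one-code-per-15-minutes rate (`EPHIDS_PER_DAY`) is an assumption. What it shows is that a diagnosed user publishes only a secret key, and every other phone re-derives the codes and checks them against its own log.

```python
import hashlib
import hmac
import secrets

# Assumption: one new broadcast code every 15 minutes, so 96 per day.
EPHIDS_PER_DAY = 96


def ephemeral_ids(day_key: bytes) -> list[bytes]:
    """Derive a day's broadcast codes from a phone's secret day key.

    Simplified stand-in for DP-3T's own key schedule: HMAC-SHA256 over a
    counter, truncated to 16 bytes. Without the key the codes look random.
    """
    return [
        hmac.new(day_key, i.to_bytes(4, "big"), hashlib.sha256).digest()[:16]
        for i in range(EPHIDS_PER_DAY)
    ]


def check_exposure(published_keys: list[bytes], heard: set[bytes]) -> bool:
    """Runs on the user's own phone; the `heard` log never leaves the device."""
    return any(heard.intersection(ephemeral_ids(key)) for key in published_keys)


# Alice's phone broadcast codes derived from her key; Bob's phone heard one.
alice_key = secrets.token_bytes(32)
bob_heard = {ephemeral_ids(alice_key)[10]}
carol_heard = {secrets.token_bytes(16)}

# After Alice is diagnosed, the server publishes only her day key.
print(check_exposure([alice_key], bob_heard))    # True
print(check_exposure([alice_key], carol_heard))  # False
```

The server never sees Bob's or Carol's logs; it only relays keys, and the matching happens on each handset.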

Fears of ‘mission creep’

In the NHS model, however, the process of code-checking happens on its central server. Users also need to provide the first four characters of their postcode. This is not, on the face of it, a malicious attempt to grab more data. A bit more information could help with modelling the spread of the virus and with identifying, anonymously, people who could spread it more easily. The central health team wouldn't be able to associate that data with a particular person, but it could rate interactions with those people as riskier and possibly tell more people to isolate themselves than it would after a less risky contact.
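For contrast, here is a hypothetical sketch of centralised matching. Everything in it is an assumption for illustration rather than the NHS's actual algorithm: the code registry, the postcode-district weights, the 15-point threshold and the example districts. What it shows is the structural difference: the server, not the phone, resolves each uploaded code to an app installation and decides whom to alert, and an extra field such as a postcode district can tip a borderline contact into an alert.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    code: str       # anonymous code the diagnosed user's phone heard
    minutes: float  # how long the two phones were near each other


# Hypothetical server-side state. In a centralised design the server knows
# which (anonymous) app installation each broadcast code belongs to.
CODE_TO_INSTALL = {"c1": "install-A", "c2": "install-B", "c3": "install-C"}
# First part of each user's postcode, supplied at sign-up (example values).
INSTALL_DISTRICT = {"install-A": "PO30", "install-B": "PO33", "install-C": "PO30"}
DISTRICT_WEIGHT = {"PO30": 1.5}  # hypothetical: contacts here scored as riskier
THRESHOLD = 15.0                 # hypothetical score that triggers an alert


def installs_to_alert(uploaded: list[Contact]) -> list[str]:
    """Match a diagnosed user's uploaded log on the server; return who to alert."""
    alerts = []
    for contact in uploaded:
        install = CODE_TO_INSTALL.get(contact.code)
        if install is None:
            continue  # code not issued by this server
        weight = DISTRICT_WEIGHT.get(INSTALL_DISTRICT[install], 1.0)
        if contact.minutes * weight >= THRESHOLD:
            alerts.append(install)
    return alerts


log = [Contact("c1", 12), Contact("c2", 12), Contact("c3", 5)]
print(installs_to_alert(log))  # ['install-A']
```

Two contacts of identical length get different outcomes purely because of the district weighting, which is the kind of extra judgement a central server can make and a phone acting alone cannot.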

The only point at which a person is ‘revealed’ as a Covid-19 case is when they have been diagnosed with the disease or request a test. Otherwise, they remain anonymous, and their contacts can also remain anonymous unless they, too, ask for a test.

The NHS model does seem to reflect very serious attempts to protect privacy, although there will still be reasonable doubts about how governments handle any data about us. There is always a danger of ‘mission creep’, where data is used for purposes beyond the one originally intended.

Will 80 percent of smartphone users sign up?

The trouble for the NHS is that Apple and Google have a very large say in all this and - perhaps to the astonishment of many people with experience of their business models - they are taking a hard line on personal privacy.

The vast majority of smartphones (well over 99 percent) use either Google's Android operating system or Apple's iOS. So what those companies say matters. And mindful of the track record of governments on privacy, they have decided that they will only allow tracing apps to use Bluetooth “in the background” if they adhere to a decentralised model. Indeed, they now call their project “exposure notification” rather than “contact tracing.”

The NHS tech experts say they have a workaround so their app will work without needing Bluetooth to run in the background. Most commentators remain unconvinced.

There are even more hurdles to jump, even if the NHS app gets over these technical barriers. First, you need a sizeable proportion of the population to take part. Since many people don't own a smartphone, it is estimated that 80 percent of smartphone users would need to download and use the app. So the whole thing needs to be very easy to install and use - and all those users need to be confident about what is happening to their data.
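The need for such high uptake follows from simple arithmetic: a contact is only logged if both people are running the app, so the share of contacts the system can see is roughly the square of the share of the population using it. The sketch below assumes that app use is independent of who meets whom, which real populations will not match exactly, and the smartphone-ownership figure in the example is hypothetical.

```python
def share_of_contacts_logged(smartphone_share: float, uptake_among_owners: float) -> float:
    """Rough share of all contacts the app can record.

    Assumes app use is independent of who meets whom. A contact is only
    logged if *both* people run the app, hence the square.
    """
    for value in (smartphone_share, uptake_among_owners):
        if not 0.0 <= value <= 1.0:
            raise ValueError("shares must be fractions between 0 and 1")
    population_uptake = smartphone_share * uptake_among_owners
    return population_uptake ** 2


# Hypothetical: 75% of people own a smartphone, 80% of those use the app.
print(round(share_of_contacts_logged(0.75, 0.8), 2))  # 0.36
```

Under those hypothetical figures, even 80 percent uptake among smartphone owners would leave roughly six in ten contacts unrecorded.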

Second, even if enough people use the app, it will quickly become discredited if it doesn't work correctly. If it produces a lot of false positives, users will simply stop isolating themselves when the app asks them to.

And the CIA’s somehow involved, too?

How will the app judge whether someone has been in close enough contact with a virus carrier? Spending an hour within 10 metres of each other is unlikely to allow for transmission; spending a few seconds talking within a metre or so might well be enough to pass the virus on. But it's not necessarily that easy. For example, how can the app know that you were very close to each other, but on either side of a perspex screen?
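Phones cannot measure distance directly; a common approach is to estimate it from Bluetooth signal strength (RSSI). The sketch below inverts the standard log-distance path-loss model and applies a distance-and-duration rule. The calibration constants, the 2-metre and 15-minute thresholds and the `is_risky_contact` function are all hypothetical, not the NHS app's parameters.

```python
import statistics

# Hypothetical calibration: RSSI (dBm) measured 1 m from the sending phone.
TX_POWER_AT_1M = -60.0
# Path-loss exponent: 2.0 in free space; indoors it varies and is often higher.
PATH_LOSS_EXPONENT = 2.0


def estimate_distance_m(rssi_dbm: float) -> float:
    """Invert the log-distance model: rssi = P_1m - 10 * n * log10(d)."""
    return 10 ** ((TX_POWER_AT_1M - rssi_dbm) / (10 * PATH_LOSS_EXPONENT))


def is_risky_contact(rssi_samples: list[float], minutes: float,
                     max_distance_m: float = 2.0, min_minutes: float = 15.0) -> bool:
    """Hypothetical rule: median estimated distance close enough, for long enough."""
    distance = statistics.median(estimate_distance_m(r) for r in rssi_samples)
    return distance <= max_distance_m and minutes >= min_minutes


print(round(estimate_distance_m(-80.0), 1))          # 10.0
print(is_risky_contact([-58.0, -62.0, -61.0], 20))   # True
print(is_risky_contact([-80.0, -82.0, -79.0], 60))   # False
```

Nothing in a signal-strength reading records whether a screen stood between the two phones, so people on either side of one can look exactly like a close contact; in practice the calibration constants also differ between handset models.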

And even if the app works, the privacy concerns are dealt with and enough people download it, it may not be enough. Modellers have looked at the impact of a fully functional smartphone app and concluded that, on its own, it couldn't bring the reproduction rate - the much-discussed “R number” - down below 1. In other words, on average, every person with the virus would go on to infect more than one other person and the epidemic would take off again.
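Why an app on its own may struggle to push R below 1 can be seen with a deliberately crude model. This is not the modellers' method: it assumes a person is traced only if both they and whoever infected them use the app, that tracing prevents a fixed share of the traced person's onward infections, and the input values in the example are hypothetical.

```python
def effective_r(r: float, uptake: float, prevented_if_traced: float) -> float:
    """Deliberately crude model of app-only tracing.

    r: reproduction number with no tracing (hypothetical input)
    uptake: share of the whole population running the app
    prevented_if_traced: share of a traced person's onward infections that
        timely isolation prevents (below 1 because alerts arrive late)
    """
    # A person is traced only if both they and whoever infected them run the app.
    # This ignores the need for the infector to be diagnosed first, so it
    # flatters the app.
    traced = uptake ** 2
    return r * (1 - traced * prevented_if_traced)


print(round(effective_r(2.5, 0.6, 0.7), 2))  # 1.87
```

With those hypothetical inputs the epidemic still grows, because tracing only ever removes a fraction of a fraction of onward infections.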

‘Test, track and trace’ is no panacea. Nonetheless, done quickly and effectively, a smartphone app could be an important part of ending the lockdown.

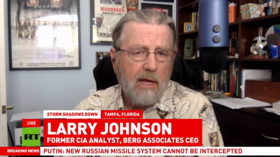

But if the NHS doesn't solve the multiple problems the app currently seems to face, probably by accepting the decentralised model put forward by Apple and Google, it is highly unlikely that enough people will use it for it to work as a track-and-trace tool. It doesn't help that NHSX, the NHS's digital arm, is working with Palantir, the controversial data-processing giant that is part-funded by the CIA.

How long before the government gets the message?


The statements, views and opinions expressed in this column are solely those of the author and do not necessarily represent those of RT.