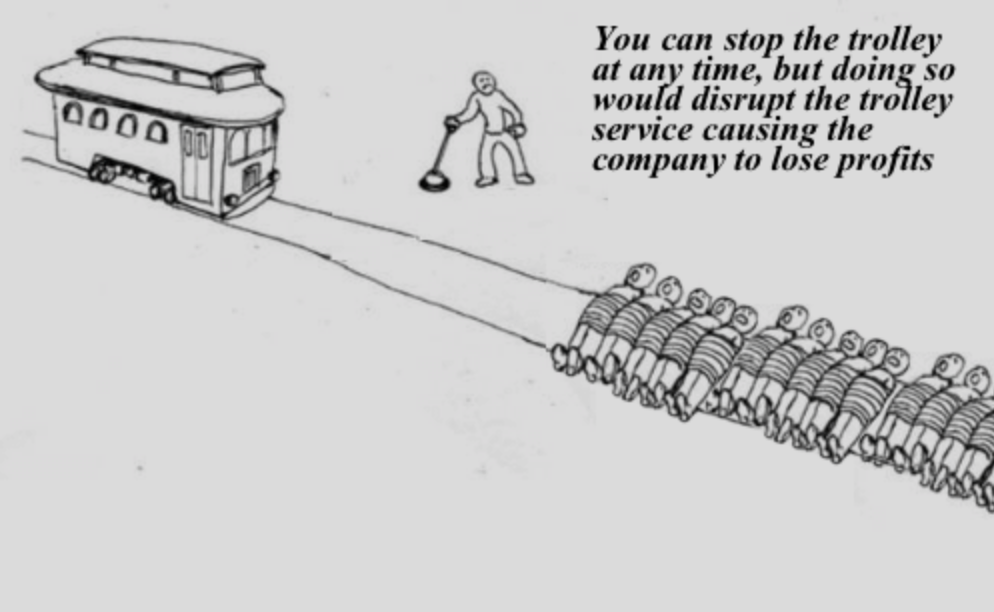

Twitter’s Covid-19 trolley problem: The censorship cost of its faulty conspiracy labels

Twitter has a trolley problem. Since its May 11 launch, its Covid-19 labeling system has misjudged content across its platform. How many wrongfully censored accounts are worth trading off for those rightly removed or labeled?

For those unaware, the trolley problem is a thought experiment in ethics that models a dilemma. One example of the dilemma would be turning a lever to move a train from a track destined to run over five individuals onto a different track, making it run over one. Who do you save? Is one life worth more than five? You see the issue.

Twitter’s new policy for Covid-19 is raising similar concerns. In the platform’s attempts to protect its users from misinformation, many innocent voices are being silenced and labeled as heinous and conspiratorial when, much of the time, they are not.

First, let’s take a look at the policy changes that came into effect on May 11, when the platform announced it would “take action based on three broad categories” in relation to tweets linked to coronavirus conspiracies. These categories were misleading information (labeled, possible removal), disputed claim (labeled, possible warning), and unverified claim (no action, possible future contextual attributions).

The label itself, “Get the facts about COVID-19,” directs users to a webpage consisting of a series of tweets debunking the theory that 5G causes coronavirus. Sounds simple enough, but, in reality, the social media giant is struggling immensely in implementing its new rules.

Even tweets by the de facto face of conspiracy, David Icke, are going unchallenged, while articles mentioning the theory are being labeled as complicit, even if the content itself entirely disputes the theory.

Although the technology that Twitter is using to enforce this system is not detailed anywhere online, one would suspect that it’s a form of natural language processing, in that it uses artificial intelligence and machine learning to decipher the meaning of tweets.

For example, the AI would be fed a series of words that it would learn the semantics behind – in this case, perhaps, ‘5G,’ ‘Covid-19,’ and ‘cause.’ Of course, this means that one article titled ‘5G causes Covid-19’ and another called ‘5G does not cause Covid-19’ could both be labeled as disputed or as misleading information because they include the ‘marked’ words.
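
To make that failure mode concrete, here is a minimal sketch of a purely keyword-based labeler. It is an illustration only: Twitter has not published how its system works, and the `MARKED_PATTERNS` table and `naive_label` function are hypothetical names invented for this example. The point is simply that a matcher which checks which words are present, and ignores negation, cannot tell the two headlines apart.

```python
import re

# Hypothetical "marked" terms, taken from the example in the text.
# Whatever terms or models Twitter actually uses are not public.
MARKED_PATTERNS = {
    "5g": re.compile(r"\b5g\b", re.IGNORECASE),
    "covid": re.compile(r"\bcovid(-19)?\b", re.IGNORECASE),
    "cause": re.compile(r"\bcaus(e|es|ed|ing)\b", re.IGNORECASE),
}


def naive_label(text: str) -> bool:
    """Return True if the text contains every marked term.

    Only the presence of words is checked; negation ("does not")
    and surrounding context are ignored entirely.
    """
    return all(p.search(text) for p in MARKED_PATTERNS.values())


headlines = [
    "5G causes Covid-19",
    "5G does not cause Covid-19",
    "New 5G masts approved in London",
]

for headline in headlines:
    print(f"{headline!r}: labeled={naive_label(headline)}")
```

Under these assumptions, the claim and its rebuttal are both flagged, while the unrelated 5G headline is not. A real system would presumably be more sophisticated than this, but the labeling errors described above are consistent with the same basic weakness.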

This is the problem with the technology, and one of the reasons it’s uncertain whether AI will ever truly understand satire or irony. A study conducted last year, ‘Identifying nuances in fake news vs. satire: using semantic and linguistic cues’, found there’s a lot of work to be done in ensuring AI correctly understands the semantics of sentences. In instances where it could understand the satire better, researchers still had no idea how or why.

This being the case, should such a flawed system be deployed at all, on a platform with 330 million users? Without a guaranteed standard of correct labeling, it’s arguable that it’s not fit for purpose. Worse still, even with a higher standard of labeling, there are some who have highlighted other negative externalities created by the very fact of such systems existing in the first place.

Speaking with CNET, Hany Farid, a computer science professor at University of California, Berkeley, highlighted the problem: “Arguably, labeling incorrectly does more harm than not labeling, because then people come to rely on that, and they come to trust it.”

Indeed, a study by the Massachusetts Institute of Technology found that, when some news stories carry warning labels, users become more likely to believe false stories that are left unlabeled, describing this as the “implied truth effect.”

It’s difficult to measure, then, how worthwhile this new labeling system actually is. In its current state, many could be labeled as conspiracy-theory pushers, when, in fact, they’re innocent. But were it improved, users could still end up falling through the gaps and believing everything they read because of their reliance on the labeling mechanism.

Twitter hasn’t stated how many errors have been made so far, which would suggest it knows that any number greater than zero is unacceptable. By continuing down one track and refusing to pull the lever, it seems that the social media giant has solved its trolley problem by completely ignoring it. Full steam ahead.

The statements, views and opinions expressed in this column are solely those of the author and do not necessarily represent those of RT.