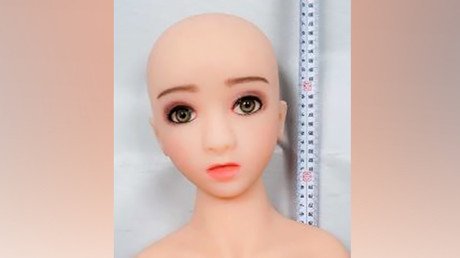

AI to flag child porn images... but can’t distinguish between nudes & desert landscapes

UK police want artificial intelligence to assume the upsetting task of intercepting child-abuse images on suspects’ phones and computers. However, existing software can’t distinguish between a naked body and a desert landscape.

London Metropolitan Police Head of Digital and Electronic Forensics Mark Stokes told The Telegraph that although the force, which examined 53,000 devices last year, already uses image recognition software, it still lacks the capability to spot indecent images and video.

While the technology appears to be successful in identifying guns, drugs and money on people’s computers and smartphones, it struggles to tell the difference between sand dunes and naked flesh.

“Sometimes it comes up with a desert and it thinks it’s an indecent image or pornography,” Stokes said, according to The Telegraph. “For some reason, lots of people have screensavers of deserts and it picks it up thinking it is skin color.”

He said, however, that artificial intelligence (AI) has the potential to learn with the help of Silicon Valley experts and could be able to accurately identify indecent images within the “next two to three years.”

The technology is intended to relieve police officers of the burden of having to go through sometimes very disturbing content in their hunt for pedophiles.

“We have to grade indecent images for different sentencing, and that has to be done by human beings right now, but machine learning takes that away from humans,” he said. “You can imagine that doing that for year-on-year is very disturbing.”

The Met has come under further scrutiny over its use of image recognition software amid reports of plans to send sensitive data to cloud providers such as Amazon Web Services, Google and Microsoft.

However, these global internet giants are vulnerable to security breaches, meaning thousands of indecent images of children could be at risk of being leaked.