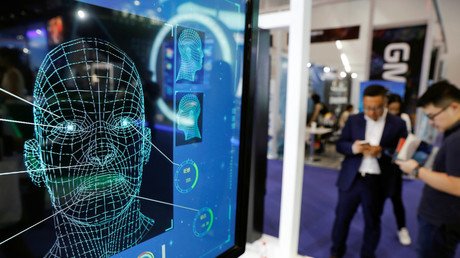

‘Minority Report’ now a reality? UK police to use AI in war on ‘pre-crime’

UK police are touting artificial intelligence as a solution to the problems of modern law enforcement, reassuring naysayers that the use of “pre-crime” algorithms will be restricted to offering “counseling” to likely offenders.

Suggesting that budget cuts have rendered mere human police incapable of doing their jobs without cybernetic help, project lead Iain Donnelly claims working with an AI system will allow the force to do more with less. He insists that the National Data Analytics Solution (NDAS), as it’s called, will target only those individuals already known to have criminal tendencies, sniffing out likely offenders to divert them with therapeutic “interventions.” That pool includes individuals who have been stopped and searched but never arrested or charged.

Donnelly claims the program is not designed to “pre-emptively arrest” anyone, but to provide “support from local health or social workers,” giving the example of an individual with a history of mental health problems being flagged as a likely violent offender, then contacted by social services. Given that a violent mental case would almost certainly react negatively to being contacted out of nowhere by a mysterious social worker – and that a history of mental health problems is not in itself criminal – Donnelly was wise to end his example there.

“Interventions” will be offered only to potential offenders, but the NDAS claims to be able to pick out likely victims as well. It combines statistics from multiple agencies with machine learning to assess an individual’s risk of committing or being victimized by gun or knife crime, or “falling victim to modern slavery,” according to documents obtained by the New Scientist.

The NDAS isolated almost 1,400 “indicators” of future criminality in a population sample of five million, analyzing more than a terabyte’s worth of data from local and national police databases. It then zeroed in on 30 particularly effective markers, including the number of crimes committed by people in one’s social group, the number of crimes committed “with the help of others,” and an individual’s age at first offense. These markers were then used to predict when an individual already known to the police was planning to re-offend, and each such individual was assigned a “risk score” accordingly.

West Midlands Police hope to begin generating predictions with the NDAS in early 2019, working hand-in-glove with the Information Commissioner’s Office to ensure privacy regulations are “respected.” The program’s designers hope to see it adopted throughout the UK; London’s Metropolitan Police and Greater Manchester Police are also reportedly on board.

The Alan Turing Institute found “serious ethical issues” with the NDAS, stating the program fails to fully recognize “important issues,” despite its good intentions, and that “inaccurate prediction is a concern.” Given that predictive policing is based on existing patterns of stops, arrests, and convictions – including those of individuals never found guilty or even charged with a crime – it can only “reinforce bias,” since it is incapable of expanding the pool of suspects beyond those tagged as suspicious by human officers.

A kinder, gentler Minority Report may sound harmless, but predictive policing algorithms like the US’s PredPol have already demonstrated an inherent racial and class bias, creating “feedback loops” that amplify officers’ own prejudices. Given the biases many believe are hard-coded into the criminal justice system, it’s difficult to see how an AI could completely break free of its programmers’ preconceptions.
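To make the “feedback loop” idea concrete, here is a deliberately simplified toy simulation. It is not how PredPol or the NDAS works; the function names, the two-district setup, and all the numbers are hypothetical. It assumes two districts with an identical true crime rate, a small initial gap in recorded crime, and a force that sends every patrol to whichever district has the most recorded crime. Because crime is only recorded where officers are looking, the initial gap widens round after round.

```python
def simulate_feedback(recorded, crimes_per_patrol, patrols_per_round, rounds):
    """Toy model: each round, all patrols go to the district with the most
    recorded crime, and only patrolled districts add to their record.

    Every district is assumed to have the SAME true crime rate
    (crimes_per_patrol), so any divergence comes from where police look.
    """
    recorded = list(recorded)  # copy so the caller's list is not mutated
    for _ in range(rounds):
        # Pick the district that the existing records rank as "worst".
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        # New records appear only where the patrols were sent.
        recorded[target] += crimes_per_patrol * patrols_per_round
    return recorded


def share(recorded, district):
    """Fraction of all recorded crime attributed to one district."""
    return recorded[district] / sum(recorded)


# Hypothetical starting records: a small gap, e.g. from past stop patterns.
start = [55, 45]
end = simulate_feedback(start, crimes_per_patrol=1, patrols_per_round=10, rounds=20)

print(f"District 0 share of recorded crime: {share(start, 0):.2f} -> {share(end, 0):.2f}")
```

In this sketch, district 0 goes from 55 percent of recorded crime to 85 percent even though both districts are equally crime-prone by construction. Real systems allocate resources less crudely, but the toy model shows why critics worry that data generated by policing decisions can end up confirming those same decisions.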
