Scientists at MIT have revealed how they trained an artificial intelligence (AI) system to become a “psychopath” by showing it only captions from disturbing images of gruesome deaths posted on Reddit.

The team from the Massachusetts Institute of Technology (MIT) named the world’s first “psychopath” AI Norman, after the central character in Hitchcock’s 1960 movie ‘Psycho.’ As part of their experiment, they exposed Norman only to a continuous stream of captions from violent images on an unnamed “infamous” subreddit to see how it would alter the bot’s behavior.

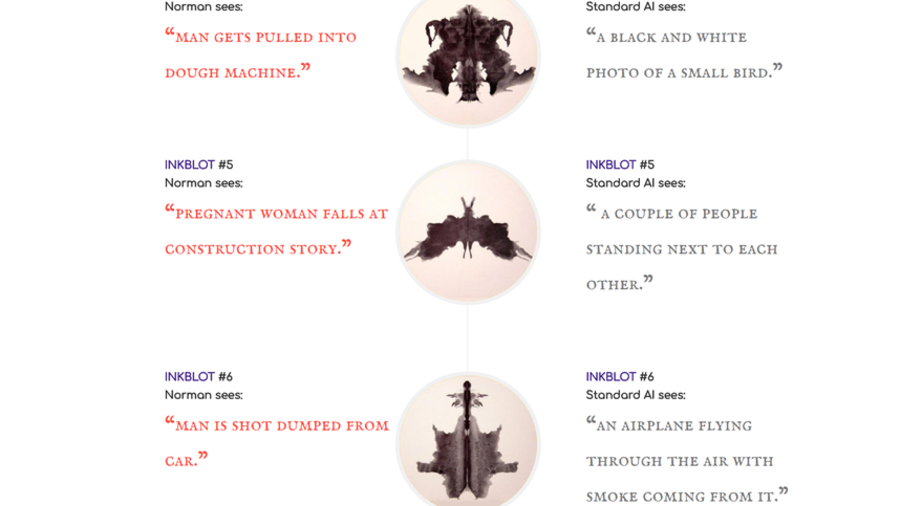

After the gruesome exposure, Norman was subjected to the Rorschach inkblot test, a psychological exam dating from 1921 in which subjects describe what they see in ambiguous inkblots. Their answers are then analyzed to detect potential thought disorders.

The team found Norman’s interpretations of the inkblots, which included electrocutions, speeding car deaths and murder, to be in line with a psychotic thought process. A standard AI that hadn’t been trained on the Reddit posts saw umbrellas, wedding cakes and flowers.

In one example, the standard AI reported seeing “a black and white photo of a baseball glove,” while Norman saw “man is murdered by machine gun in broad daylight.” In another, the standard bot reported “a black and white photo of a small bird,” while Norman said the inkblot showed the moment a “man gets pulled into dough machine.”

The scientists say they developed a “deep learning method” to train the AI to produce written descriptions of images. MIT team members Pinar Yanardag, Manuel Cebrian, and Iyad Rahwan say the study proved their theory that the data used to teach a machine learning algorithm can greatly influence its behavior.

They say the results of the experiment show that when algorithms, such as those used by Facebook or Google, are accused of being “biased or unfair,” “the culprit is often not the algorithm itself but the biased data that was fed into it.”
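The researchers’ point can be illustrated with a toy sketch. This is not the MIT team’s method: it swaps their deep learning captioner for a simple nearest-neighbor lookup, and the feature values, captions, and function names (`train`, `describe`) are all invented for illustration. What it shows is the same code, run on two different training sets, describing the same “inkblot” in two very different ways.

```python
def train(examples):
    """'Training' in this toy just stores (features, caption) pairs."""
    return list(examples)


def describe(model, features):
    """Return the caption of the stored example closest to `features`."""
    def squared_distance(example):
        stored_features, _caption = example
        return sum((a - b) ** 2 for a, b in zip(stored_features, features))

    closest = min(model, key=squared_distance)
    return closest[1]


# Hypothetical training data: (darkness, symmetry) -> caption.
# The features are identical in both sets; only the captions differ.
standard_data = [
    ((0.2, 0.9), "a vase of flowers"),
    ((0.8, 0.7), "a small bird on a branch"),
]
skewed_data = [
    ((0.2, 0.9), "a man falls from a building"),
    ((0.8, 0.7), "a man is hit by a speeding car"),
]

# Same algorithm, different data.
standard_model = train(standard_data)
skewed_model = train(skewed_data)

# One made-up inkblot, shown to both models.
inkblot = (0.75, 0.65)
standard_caption = describe(standard_model, inkblot)
skewed_caption = describe(skewed_model, inkblot)

print("Standard model sees:", standard_caption)
print("Skewed model sees:  ", skewed_caption)
```

Nothing in `describe` is violent or benign in itself. The difference in output comes entirely from the captions each model was given.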
