Stuxnet evolution: NSA input turned stealth weapon into internet-roaming spyware

Forensic analysis of the Stuxnet cyber-warfare operation reveals how an initial version of the virus, which was ‘a display of absolute cyber-power,’ evolved into a simpler, self-replicating malware that reported home and was eventually detected.

There were two distinct versions of Stuxnet, the computer virus that is widely believed to have been developed by the US and Israel to hamper uranium enrichment at Iran’s Natanz nuclear facility. The people behind it likely shifted their goals at some point during the cyber-warfare campaign, which involved bringing in new IT specialists with a whole new range of secret knowledge.

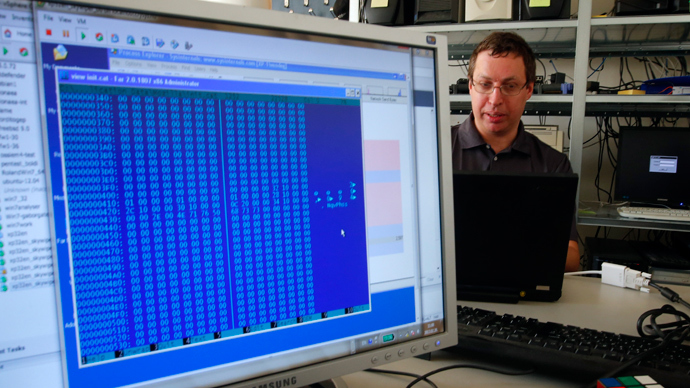

The news comes from Ralph Langner, an independent German cyber-security expert specializing in control systems, who has been heavily involved in the study of Stuxnet and the damage it caused, and who shared his conclusions with Foreign Policy magazine.

Speed bug & pressure bug

The earlier version of the virus code was submitted to a computer security site back in 2007, but it was identified as Stuxnet only years later, by experts dissecting later versions. The old Stuxnet targeted Iranian uranium enrichment centrifuges in a different way and was also much more difficult to detect. But it didn’t have the virulence of its descendant, Langner writes.

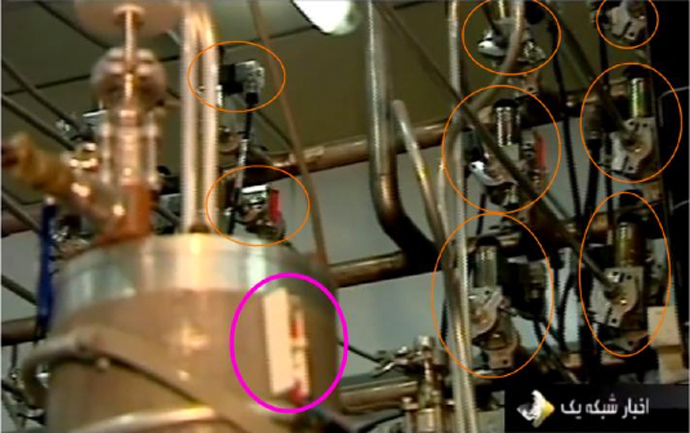

Both versions were designed to take over industrial controllers, the digital tools regulating the operation of the centrifuge cascades. The later version overstressed the centrifuges by changing the speed of their rotors and making them spin in a highly erosive manner, a routine that could plausibly be detected simply by listening, should Natanz engineers remove their protective headsets near the centrifuges.

By contrast, the earlier code acted in a more elaborate way, exploiting a technological peculiarity of the Natanz facility. Iran’s centrifuges there are an altered copy of an obsolete European design. Since Iran doesn’t have access to precision manufacturing of centrifuge parts, it has to operate the ones it produces in a mode that makes them prone to malfunction.

A special protection system shuts off those malfunctioning centrifuges, which are then replaced while the others are still working. And if several centrifuges in a single group of 164 machines get kicked out, the same system vents off excessive pressure.

The earlier Stuxnet took control of that protection system and tricked it into building up the pressure just enough to speed up erosion of the centrifuges, without causing a catastrophic failure.

Researchers initially believed that the two methods of attack were meant to complement each other, but this was not the case, Langner says. Rather it points to “changing priorities that most likely were accompanied by a change in stakeholders.”

Change of goals

Another sign of the shift is the difference in infection methods of the two versions. The earlier Stuxnet had to be manually installed on controller systems at the facility by a knowing agent, while the later version was designed to self-replicate and spread through the USB drives and laptops of unwitting engineers.

The later code also exploited a number of previously unknown vulnerabilities in the Windows operating system (so-called zero-day exploits) and used stolen digital certificates to pose as legitimate software.

“The development of the overpressure attack can be viewed as the work of an in-group of top-notch industrial control system security experts and coders who lived in an exotic ecosystem quite remote from standard IT security,” Langner explains.

“The overspeed attacks point to the circle widening and acquiring a new center of gravity. If Stuxnet is American-built – and, according to published reports, it most certainly is – then there is only one logical location for this center of gravity: Fort Meade, Maryland, the home of the National Security Agency,” he adds.

Costly disguise

Both versions of the virus were powerful enough to trigger a catastrophic failure, damaging hundreds of centrifuges in a single incident, but the attackers instead opted for slow, covert sabotage.

“The attackers were in a position where they could have broken the victim's neck, but they chose continuous periodical choking instead,” Langner says.

This self-restraint considerably increased the cost of developing the malware.

“I estimate that well over 50 percent of Stuxnet's development cost went into efforts to hide the attack, with the bulk of that cost dedicated to the overpressure attack which represents the ultimate in disguise – at the cost of having to build a fully-functional mockup IR-1 centrifuge cascade operating with real uranium hexafluoride,” he explained.

In the end the strategy paid off. Langner believes that the damage caused by the virus stalled Iran’s uranium enrichment operation by about two years, longer than a massive one-time crippling of the facility would have achieved.

Deliberate exposure?

Langner challenges the common narrative that Stuxnet ‘escaped’ the Natanz facility by accident, only to be eventually detected and studied by cyber-security experts. He cites tools in the virus that allowed it to send reports from infected computers to command-and-control servers.

“It appears that the attackers were clearly anticipating (and accepting) a spread to noncombatant systems and were quite eager to monitor that spread closely,” he says. “This monitoring would eventually deliver information on contractors working at Natanz, their other clients, and maybe even clandestine nuclear facilities in Iran.”

He adds that Stuxnet’s exposure had a side benefit for the United States in terms of reputational gains.

“If another country – maybe even an adversary – had been first in demonstrating proficiency in the digital domain, it would have been nothing short of another ‘Sputnik’ moment in US history.”