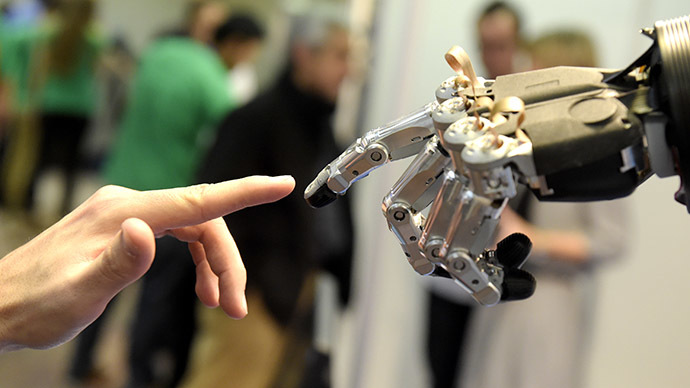

Scientists join Elon Musk & Stephen Hawking, warn of dangerous AI

Hundreds of leading scientists and technologists have joined Stephen Hawking and Elon Musk in warning of the potential dangers of sophisticated artificial intelligence, signing an open letter calling for research on how to avoid harming humanity.

The open letter, drafted by the Future of Life Institute and signed by hundreds of academics and technologists, calls on the artificial intelligence research community not only to invest in research into making good decisions and plans for the future, but also to examine thoroughly how those advances might affect society.

The letter’s authors recognize the remarkable successes in “speech recognition, image classification, autonomous vehicles, machine translation, legged locomotion and question-answering systems,” and argue that it is not unfathomable that the research may lead to the eradication of disease and poverty. But they insist that “our AI systems must do what we want them to do” and lay out research objectives that will “help maximize the societal benefit of AI.”

The document of research priorities says that future AI could potentially impact society in areas such as computer security, economics, law, and philosophy, and highlights many concerns for communities. For instance, scientists suggested that AI should be developed to analyze how workers – and their wages – would be affected if parts of the economy become automated.

They also suggested examining several AI-related issues that need to be considered before breakthroughs can be regarded as beneficial to society: Can lethal autonomous weapons be made to comply with humanitarian law? How will privacy rights constrain AI systems that obtain data from surveillance cameras, phone lines, and emails?

Elon Musk, the CEO of Tesla and SpaceX, has repeatedly voiced concerns about artificial intelligence, saying it could be more dangerous than nuclear weapons.

Stephen Hawking, Elon Musk and others call for research to avoid dangers of AI http://t.co/t25P2K5Dxj pic.twitter.com/eSXBHEdemN

— IndyTech (@IndyTech) January 12, 2015

“I’m increasingly inclined to think there should be some regulatory oversight maybe at the national and international level, just to make sure that we don’t do something very foolish,” Musk said.

Musk has invested in companies developing AI, a move he says allows him “to keep an eye on them.”

Additionally, a group of scholars from Oxford University wrote in a blog post last year that “when a machine is ‘wrong,’ it can be wrong in a far more dramatic way, with more unpredictable outcomes, than a human could. Simple algorithms should be extremely predictable, but can make bizarre decisions in ‘unusual’ circumstances.”

World-famous physicist Stephen Hawking, who relies on a form of artificial intelligence to communicate, told the BBC that if technology could match human capabilities, “it would take off on its own, and re-design itself at an ever increasing rate.”

He also said that, because of biological limitations, humans would be unable to keep pace with the development of technology.

“Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded,” he said. “The development of full artificial intelligence could spell the end of the human race.”

The document is signed by many representatives from Facebook, Google, Skype, and artificial intelligence companies DeepMind and Vicarious. Academics from many of the world’s most prestigious universities have also signed it, including those from Cambridge, Oxford, Harvard, Stanford, and MIT.